AI: Xbot

Model Context Protocol (MCP) Server

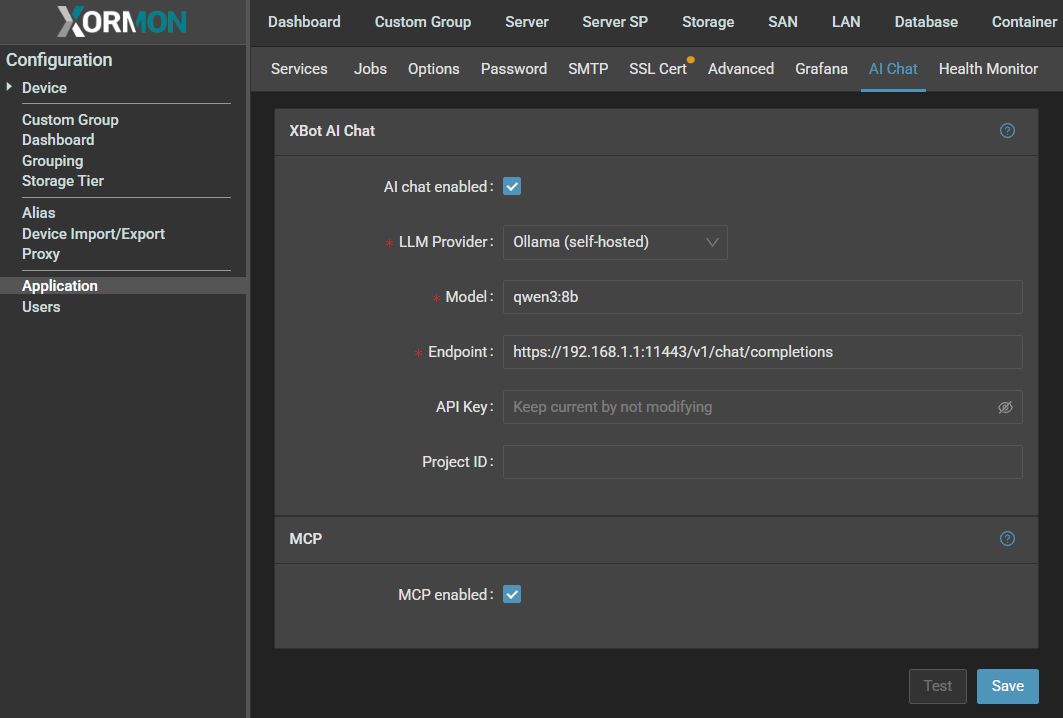

Xormon allows you to enable an integrated MCP (Model Context Protocol) Server, which provides secure and controlled sharing of all available internal tools with external AI chat systems. Once the MCP Server is enabled, external AI clients can access the complete toolset while still operating within defined security and permission boundaries.

This feature simplifies automation and integration by allowing AI-driven workflows to interact directly with Xormon’s capabilities, while maintaining a clear separation between internal logic and external interfaces.

To enable the MCP Server, go to Settings ➡ Application ➡ AI Chat. No additional deployment steps are required.
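
To give a feel for what an external AI client sends once the MCP Server is enabled, here is a minimal sketch of the two JSON-RPC 2.0 messages that the Model Context Protocol defines for tools: `tools/list` to discover the exposed tools and `tools/call` to invoke one. The tool name `list_servers` and its `state` argument are hypothetical and are not taken from Xormon. The sketch only builds the messages; it leaves out the transport, authentication, and the initialization handshake that a real MCP client performs first.

```python
import json
from itertools import count

# Monotonically increasing request ids, as JSON-RPC expects a unique id per request.
_ids = count(1)


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request of the kind MCP clients send."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name. "list_servers" and its arguments are hypothetical.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "list_servers", "arguments": {"state": "running"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

In practice an MCP client library would normally build and send these messages for you; the point here is only that the client names a tool and passes arguments, and never touches internal logic directly.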


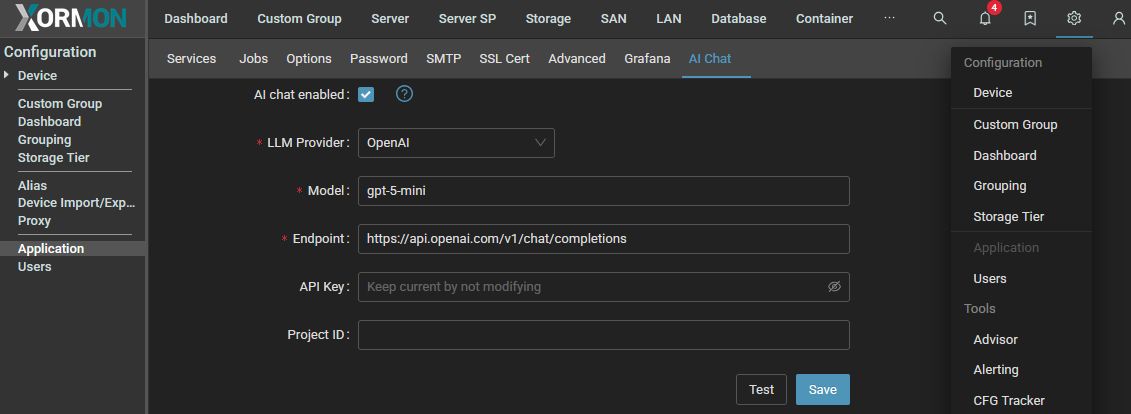

Xormon allows you to seamlessly connect your own Large Language Model (LLM) or third-party providers (such as OpenAI) directly into the platform.

This enables you to leverage the power of conversational AI while giving the model controlled access to Xormon’s data and capabilities.

How It Works

Xormon uses a tool calling mechanism that exposes selected application functions to the LLM. Instead of granting direct access to raw data, the model interacts with Xormon through predefined tools. These tools can query data, perform actions, and return structured results, which the LLM can then interpret and present to the user in a natural, conversational format.

In practice, this means:

- You can connect your own hosted model or integrate with a provider like OpenAI.

- The LLM can call only the functions you explicitly expose.

- Results are returned in a structured way, ensuring reliable and safe integration.

- The model handles presentation, while Xormon ensures secure and accurate data retrieval.
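
The tool calling mechanism described above can be illustrated with a small sketch. It assumes the model emits a tool call as a JSON object with a `name` and `arguments`, and that the application keeps an explicit registry of exposed functions. All names here (`list_servers`, `delete_server`, `EXPOSED_TOOLS`, `dispatch`) and the sample data are hypothetical; this is not Xormon's actual implementation.

```python
import json

# Hypothetical in-memory data standing in for the application's data layer.
_SERVERS = [
    {"name": "srv-01", "state": "running", "health": "ok"},
    {"name": "srv-02", "state": "stopped", "health": "unknown"},
    {"name": "srv-03", "state": "running", "health": "warning"},
]


def list_servers(state=None):
    """Return servers, optionally filtered by state."""
    return [s for s in _SERVERS if state is None or s["state"] == state]


def delete_server(name):
    """An internal function that is deliberately NOT exposed to the model."""
    raise RuntimeError("should never be reachable from a tool call")


# Only functions registered here can be called by the model.
EXPOSED_TOOLS = {"list_servers": list_servers}


def dispatch(tool_call_json):
    """Run one model-issued tool call and return a structured result."""
    call = json.loads(tool_call_json)
    tool = EXPOSED_TOOLS.get(call.get("name"))
    if tool is None:
        return {"ok": False, "error": f"tool not exposed: {call.get('name')}"}
    try:
        return {"ok": True, "result": tool(**call.get("arguments", {}))}
    except TypeError as exc:  # wrong or unexpected arguments
        return {"ok": False, "error": str(exc)}


allowed = dispatch('{"name": "list_servers", "arguments": {"state": "running"}}')
denied = dispatch('{"name": "delete_server", "arguments": {"name": "srv-01"}}')
print(allowed)
print(denied)
```

The model only ever sees the structured dictionaries returned by `dispatch`. A call to anything outside the registry is rejected before any application code runs, which is the property the "only the functions you explicitly expose" point relies on.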

Benefits

- Flexibility – Choose any LLM provider or use your own deployment.

- Security – Fine-grained control over which functions the model can call.

- Consistency – Data always comes from Xormon’s verified sources.

- Seamless UX – Users interact naturally with the model while getting precise results.

Example Use Cases

- Querying your infrastructure to see which servers or virtual machines are running, their state, and health status.

- Searching and filtering resources based on parameters like WWN, alerts, or performance metrics.

- Formatting output into user-friendly structures, such as CSV files, for further analysis.
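
As an illustration of the filtering and CSV formatting use cases, the following sketch filters a structured result by WWN prefix and alert count, then serializes it to CSV with Python's standard `csv` module. The record layout, the sample WWNs, and the helper names `filter_rows` and `to_csv` are assumptions made for the example, not Xormon's real output format.

```python
import csv
import io

# Hypothetical structured result, shaped like the output of a tool call.
volumes = [
    {"name": "vol-a", "wwn": "600A0B80001234560000000000000001", "alerts": 0},
    {"name": "vol-b", "wwn": "600A0B80001234560000000000000002", "alerts": 3},
    {"name": "vol-c", "wwn": "600507680C80000000000000000000AA", "alerts": 1},
]


def filter_rows(rows, wwn_prefix=None, min_alerts=0):
    """Keep rows whose WWN starts with wwn_prefix and that have enough alerts."""
    return [
        r for r in rows
        if (wwn_prefix is None or r["wwn"].upper().startswith(wwn_prefix.upper()))
        and r["alerts"] >= min_alerts
    ]


def to_csv(rows, columns):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Volumes on the "600A0B80" prefix that have at least one alert.
selected = filter_rows(volumes, wwn_prefix="600a0b80", min_alerts=1)
csv_text = to_csv(selected, ["name", "wwn", "alerts"])
print(csv_text)
```

Because the data arrives as structured records rather than free text, reformatting it is a mechanical step and does not depend on the model reproducing values correctly.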

Tested LLM providers

- Ollama

- OpenAI

- DeepSeek

- WatsonX

Security implications

The attached AI does not scan the Xormon database. It is only used to translate words and sentences into queries and to present the output.

Configuration
